
Matthew Lisondra

I am currently a Robotics Researcher at the University of Toronto Robotics Institute.

My research focuses on Robot Perception, Robot Learning, Computer Vision, Simultaneous Localization and Mapping (SLAM), Autonomous/Intelligent Systems Algorithms, High-Framerate and Low-Power Processing for Unconventional Sensing, 3D Scene Representations, and Embodied AI.

Research Affiliations

I am affiliated with the following research labs:

Email / LinkedIn / Google Scholar


Education

UofT Robotics Institute, University of Toronto: PhD, Doctor of Philosophy (Mechanical Engineering)

Robotics and Computer Vision Laboratory (RCVL): MASc, Master of Applied Science (Mechanical Engineering)

Physics, University of Toronto: HBSc, Honours Bachelor of Science (Physics and Computer Science)

- Focus: Robotic Mechanics, Probability, Time-Series Analysis, Computational Physics

- Research: Time-Series Analysis of Global Temperature and Sea-Level Pressure

- Research: Helium-Neon Laser Analysis (Reviewed by Dr. A. Vutha)

- Research: Percolation in Porous Rock via Monte Carlo Simulation of Random Processes

- Collaborated with: Dr. D. Jones of APCM Group


Selected Publications/Works


[1] SplatSearch: Instance Image Goal Navigation for Mobile Robots using 3D Gaussian Splatting and Diffusion Models

Siddarth Narasimhan¹,², Matthew Lisondra¹, Haitong Wang¹, Goldie Nejat¹

(¹University of Toronto, ²SyncereAI)

IEEE Robotics and Automation Letters (RA-L), 2026

(In Review)

bibtex / Project Webpage / PDF / Video / Code

Introduced SplatSearch, a novel architecture for Instance Image Goal Navigation (IIN) in unknown environments

Leveraged sparse-view 3D Gaussian Splatting (3DGS) to reconstruct environments and render multiple novel viewpoints

Developed a View-Consistent Image Completion Network (VCICN), a multi-view diffusion model for inpainting missing regions in rendered views

Enabled viewpoint-invariant goal recognition by combining geometry-based rendering with diffusion-based inpainting

Proposed a frontier exploration strategy that fuses semantic context from goal images with visual context from synthesized views

Allowed robots to prioritize frontiers that are both semantically and visually relevant to the search target

Outperformed state-of-the-art IIN methods in Success Rate (SR) and Success weighted by Path Length (SPL) in photorealistic and real-world environments


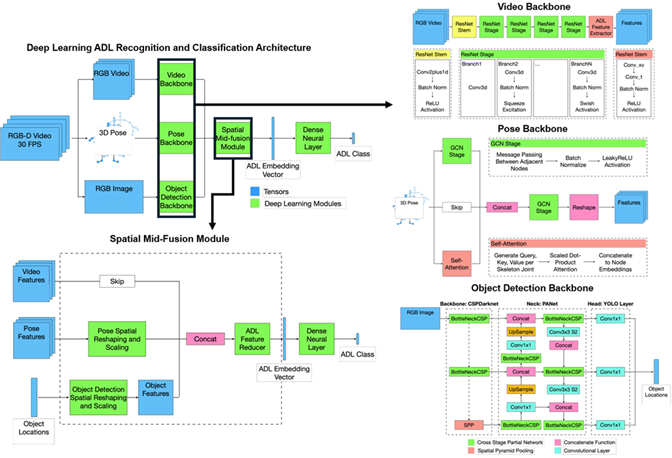

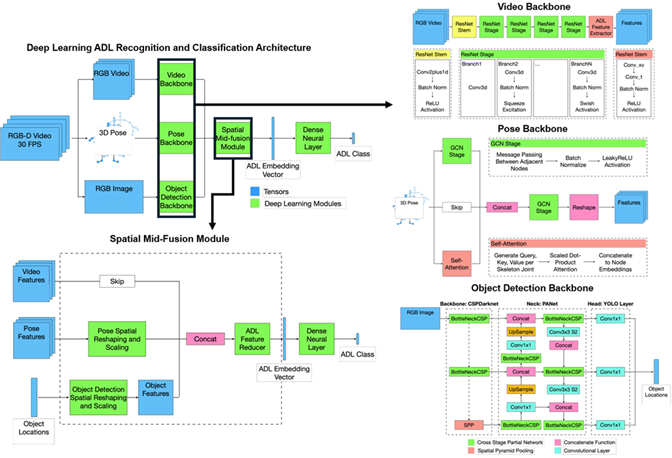

[2] PovNet+: A Deep Learning Architecture for Socially Assistive Robots to Learn and Assist with Multiple Activities of Daily Living

Matthew Lisondra*¹, Souren Pashangpour*¹, Fraser Robinson², Goldie Nejat¹

(¹University of Toronto, ²Resolve Surgical)

Taylor & Francis, Advanced Robotics, 2026

Special Issue on Robot and Human Interactive Communication

(In Review)

Developed the first multimodal deep learning system for recognizing multiple activities of daily living (ADLs) in socially assistive robots (SARs)

Introduced ADL and motion embedding spaces to detect known, unseen, and atypical ADLs

Proposed a user state estimation method using similarity functions for real-time ADL inference

Enabled proactive HRI by allowing SARs to autonomously initiate context-aware assistive behaviors

Addressed the false positives and limited generalization of prior ADL recognition systems

Achieved higher classification accuracy than state-of-the-art human activity recognition models

Validated in cluttered home-like environments using the socially assistive robot Leia

Adapted to diverse users by recognizing variable and non-standard ADL execution styles


[3] Inverse k-Visibility for RSSI-Based Indoor Geometric Mapping

Matthew Lisondra*¹, Junseo Kim*², Yeganeh Bahoo³, Sajad Saeedi⁴

(¹University of Toronto, ²TU Delft, ³TMU, ⁴University College London)

Autonomous Robots, 2026

(In Review)

bibtex / Project Webpage / PDF

Presents a novel algorithm that generates geometric maps from WiFi signals received from multiple routers

Benchmarks the WiFi-generated maps against LiDAR-generated maps by comparing area, number of data points, RSSI prediction True/False setting, RSSI accuracy percentage, IoU, and MSE scores

Evaluated on real-world data collected from indoor spaces


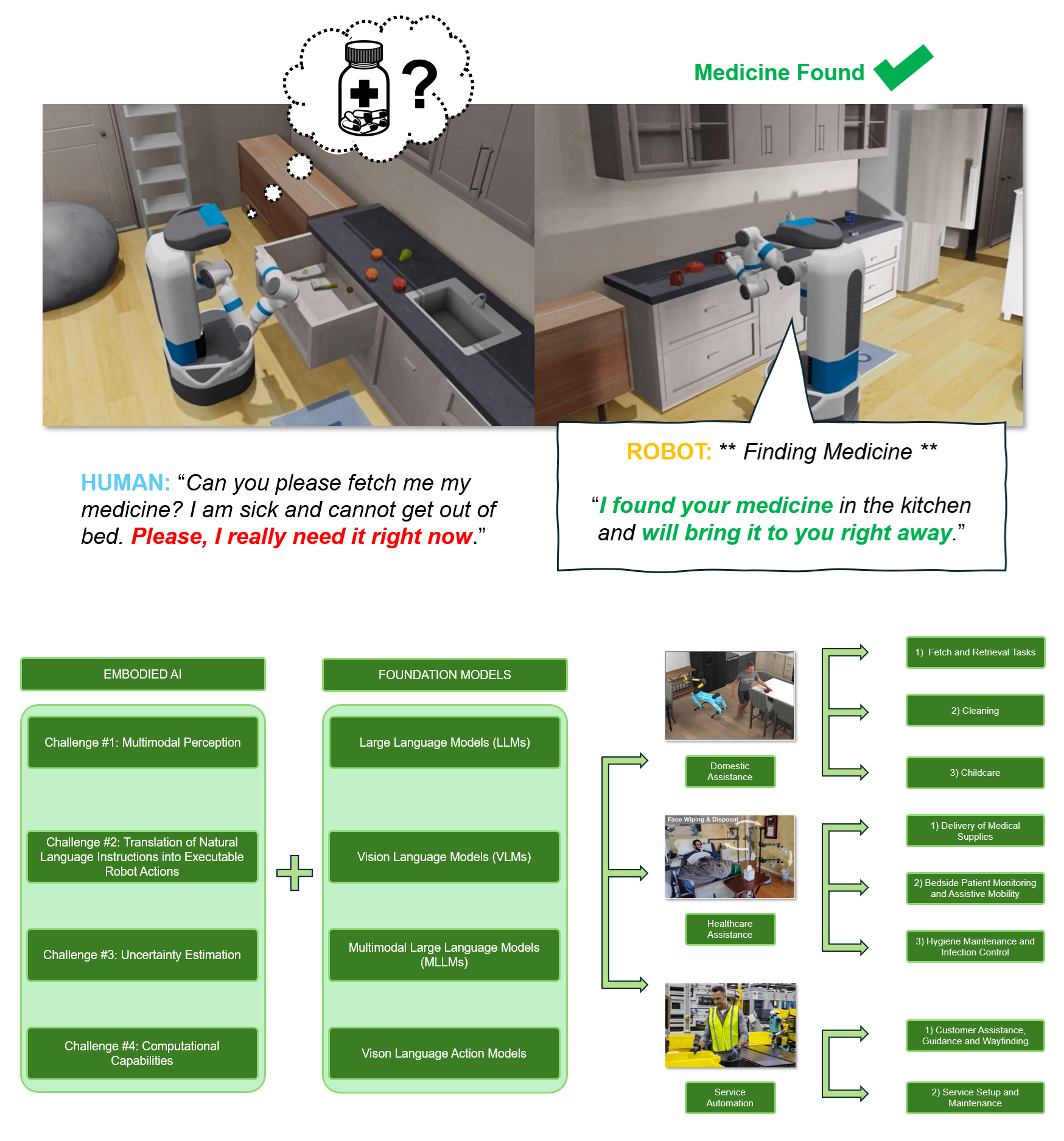

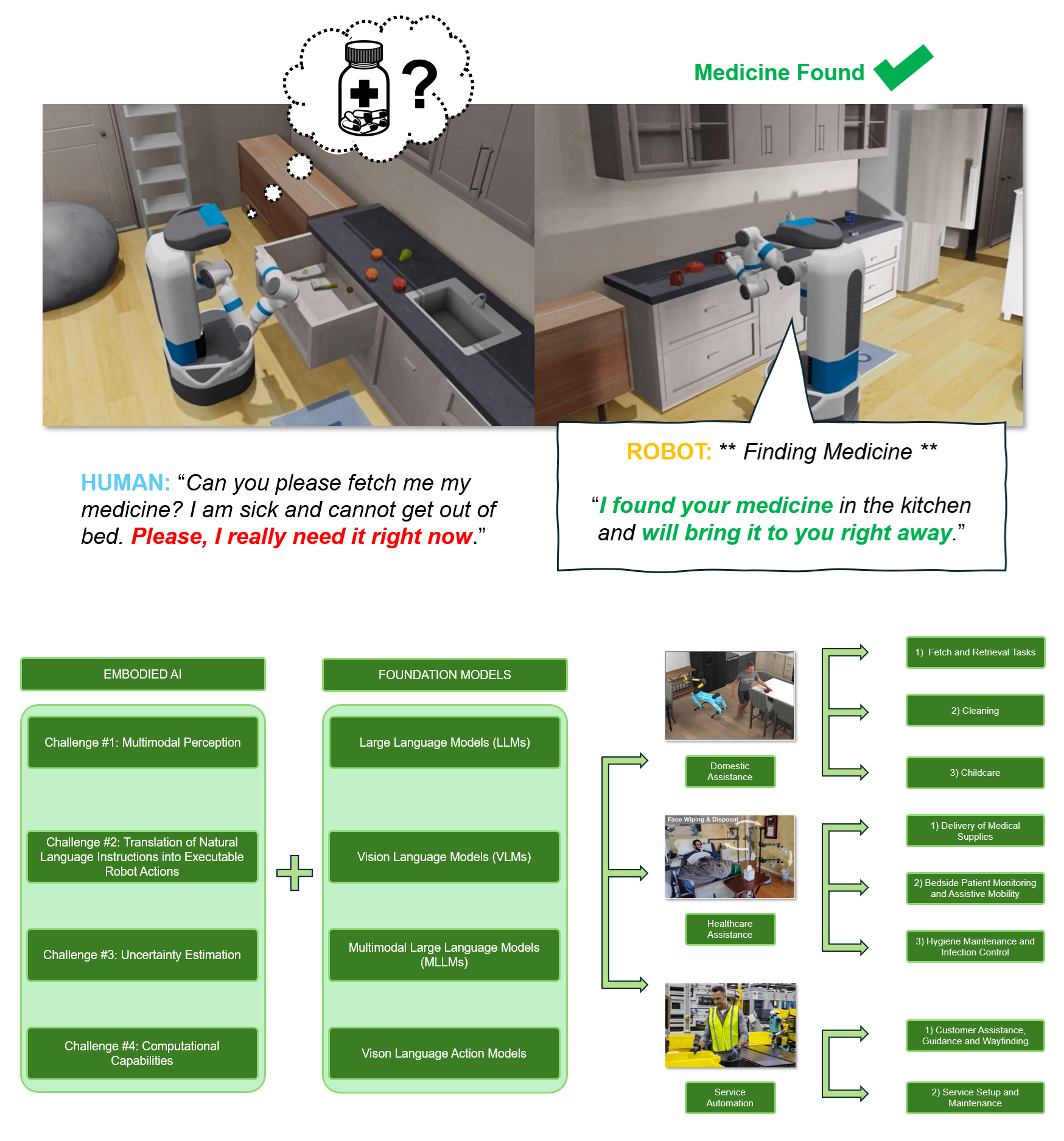

[4] Embodied AI with Foundation Models for Mobile Service Robots: A Systematic Review

Matthew Lisondra¹, Beno Benhabib¹, Goldie Nejat¹

(¹University of Toronto)

Robotics, 2026

Special Issue on Embodied Intelligence: Physical Human–Robot Interaction

bibtex / PDF

Presents the first systematic review of foundation models integrated into mobile service robotics

Identifies and categorizes the core challenges in embodied AI: multimodal sensor fusion, real-time decision-making, task generalization, and effective human-robot interaction

Analyzes how foundation models (LLMs, VLMs, MLLMs, VLAs) enable real-time sensor fusion, language-conditioned control, and adaptive task execution

Examines real-world deployments across domestic assistance, healthcare, and service automation domains

Demonstrates the transformative role of foundation models in enabling scalable, adaptable, and semantically grounded robot behavior

Proposes future research directions emphasizing predictive scaling laws, autonomous long-term adaptation, and cross-embodiment generalization

Highlights the need for robust and efficient deployment of foundation models in human-centric robotic systems


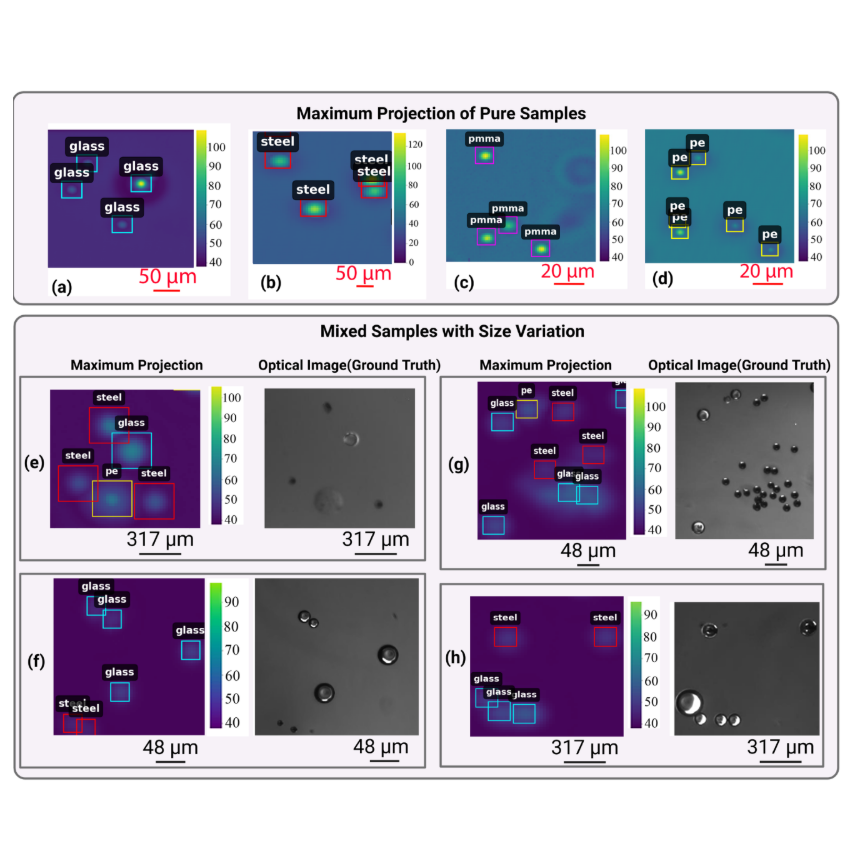

[5] High-Frequency Ultrasound Combined with Deep Learning Enables Identification and Size Estimation of Microplastics

Navid Zarrabi², Eric Stohm³, Hadi Rezvani⁴, Matthew Lisondra¹, Nariman Yousefi⁴, Sajad Saeedi⁵, Michael Kolios³

(¹University of Toronto, ²TMU, ³TMU Department of Physics, ⁴TMU Chemical Engineering, ⁵University College London)

npj Emerging Contaminants (Nature Portfolio), 2026

bibtex / PDF / Code

Developed a high-frequency ultrasound-based method for microplastic detection and sizing

Designed a peak extraction technique to isolate strong backscatter signals for analysis

Achieved 99.63% material classification accuracy across four materials (PE, PMMA, steel, glass) using a custom 1D-CNN

Performed size estimation with a material-specific MLP achieving 99.97% accuracy

Combined classification and sizing into an integrated machine learning pipeline

Provides a fast, low-cost, and automated alternative to traditional spectroscopic methods

Validated on a custom acoustic dataset, showing potential for real-time in situ monitoring


[6] TCB-VIO: Tightly-Coupled Focal-Plane Binary-Enhanced Visual Inertial Odometry

Matthew Lisondra*¹, Junseo Kim*², Glenn Takashi Shimoda³, Sajad Saeedi⁴

(¹University of Toronto, ²TU Delft, ³TMU, ⁴University College London)

IEEE Robotics and Automation Letters (RA-L), 2025

IEEE International Conference on Robotics and Automation (ICRA), 2026

bibtex / Project Webpage / PDF / Video

Designed the first tightly coupled 6-DoF Visual-Inertial Odometry (TCB-VIO) for focal-plane sensor-processors (FPSPs)

Operates at 250 FPS with on-sensor binary edge input and 400 Hz IMU integration via MSCKF

Introduced binary-enhanced KLT tracker for robust and smooth feature tracking on SCAMP-5

Reduces latency and power via direct on-sensor SIMD processing with limited pixel memory

Outperforms VINS-Mono, ORB-SLAM3, and ROVIO under aggressive motion and fast dynamics

Achieves long, stable feature tracks in real-world tests with motion-capture ground truth

Extends the tightly coupled OpenVINS architecture to binary descriptors and high-speed tracking


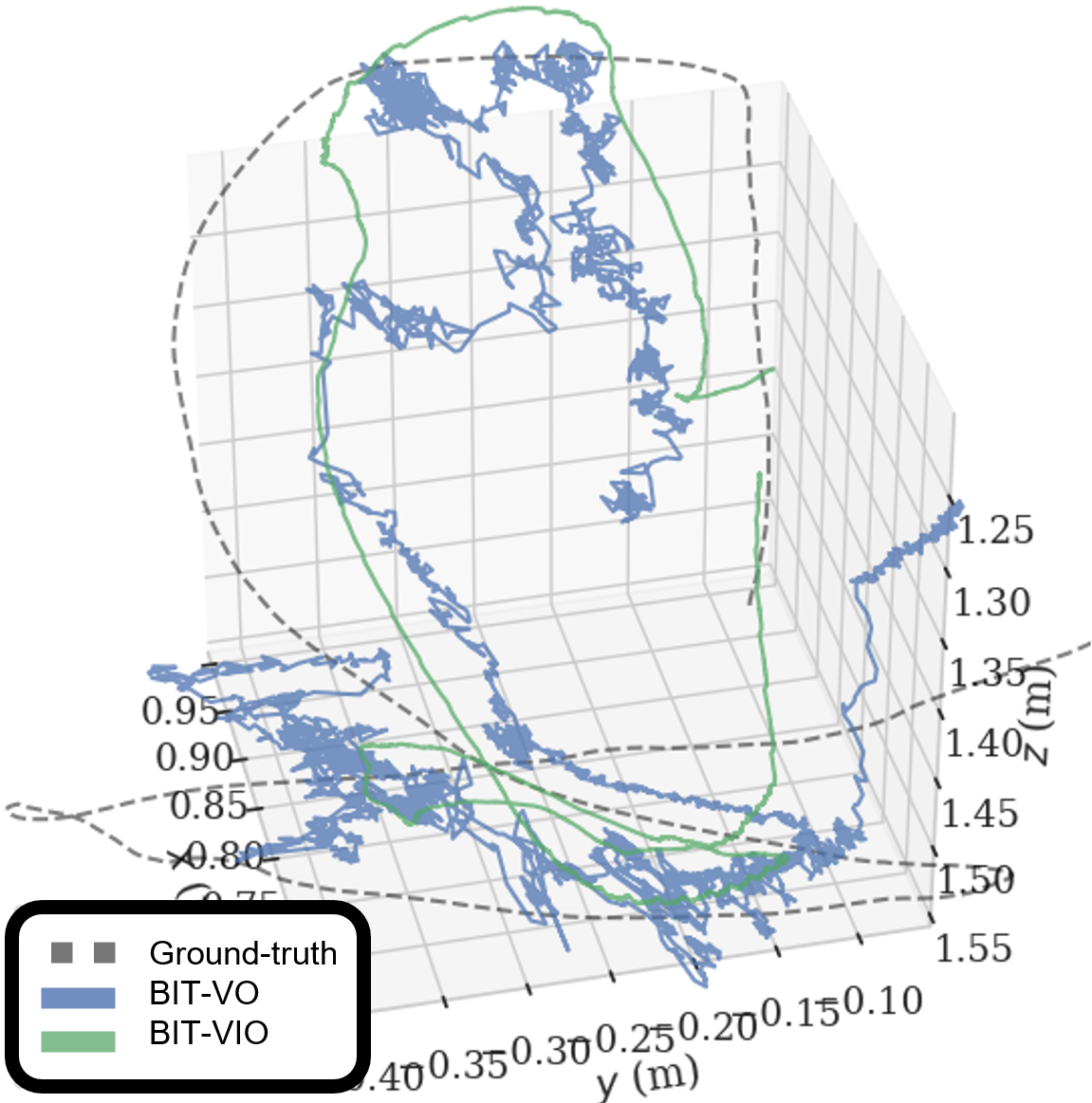

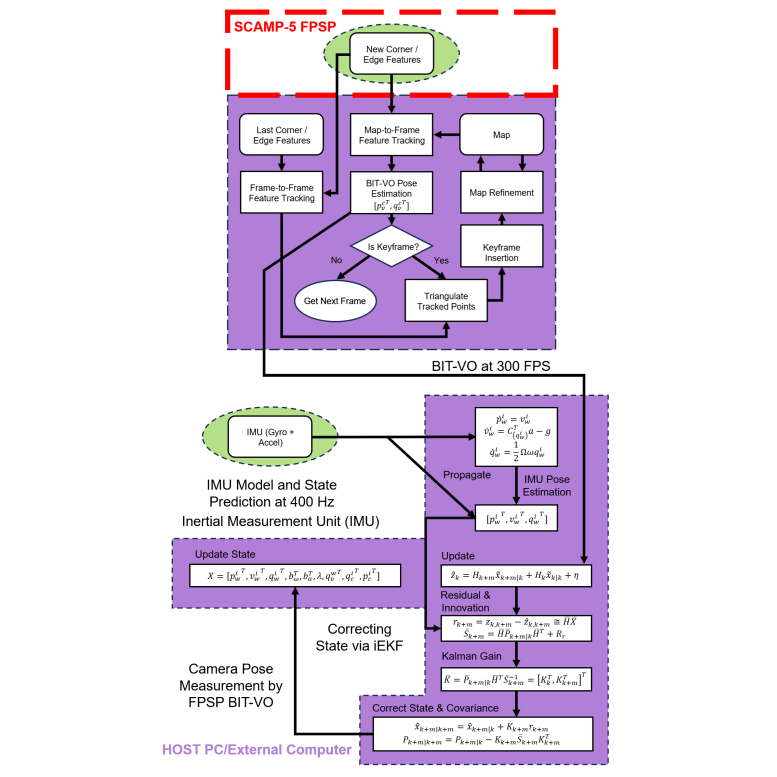

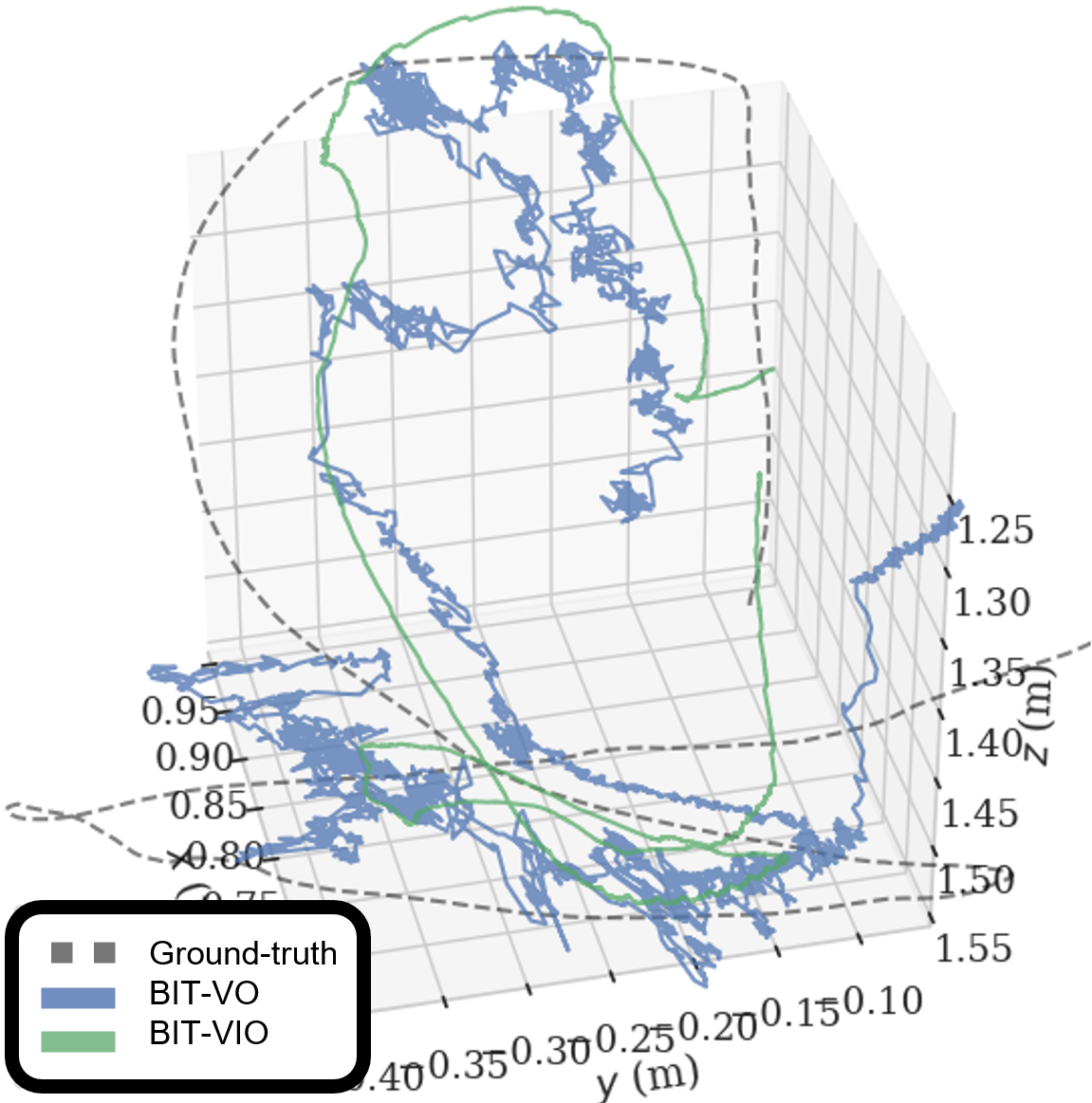

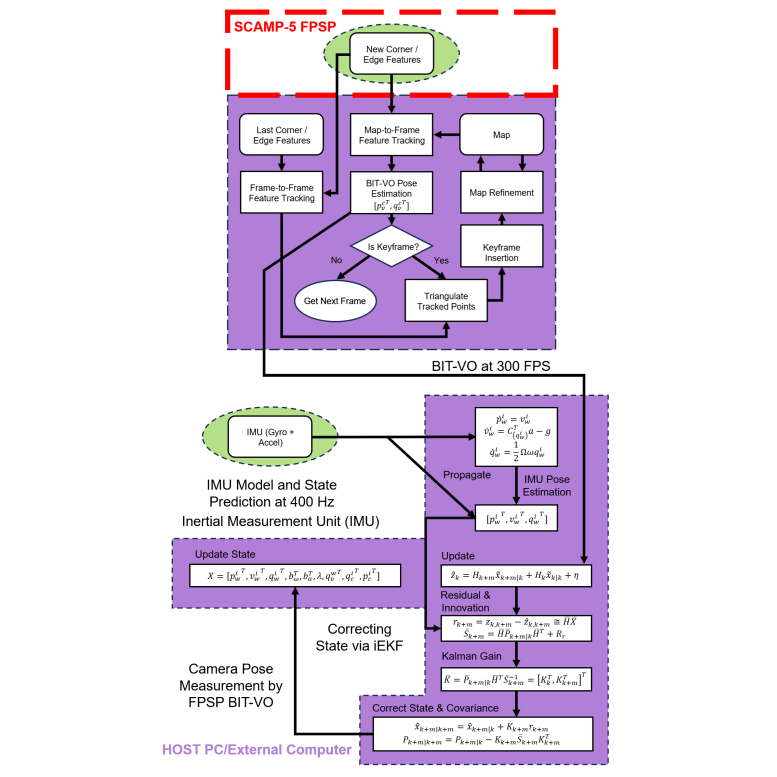

[7] Visual Inertial Odometry using Focal Plane Binary Features (BIT-VIO)

Matthew Lisondra*¹, Junseo Kim*², Riku Murai³, Koroush Zareinia⁴, Sajad Saeedi⁵

(¹University of Toronto, ²TU Delft, ³Imperial College London, ⁴TMU, ⁵University College London)

IEEE International Conference on Robotics and Automation (ICRA), 2024

bibtex / Project Webpage / PDF / Video / Presentation in Yokohama, Japan

Designed the first 6-DoF Visual-Inertial Odometry (BIT-VIO) on focal-plane sensor-processors (FPSPs)

Efficient VIO running at 300 FPS, using a loosely coupled iterated extended Kalman filter (iEKF) to correct predictions from IMU measurements obtained at 400 Hz

Propagates uncertainty for BIT-VO's pose estimates, which are based on binary-edge descriptor extraction

Extensive real-world comparison against BIT-VO, with ground truth obtained using a motion capture system


[8] Focal-Plane Sensor-Processor-Based Visual Inertial Odometry

Matthew Lisondra¹ (Selected Committee Members: Dr. Guanghui (Richard) Wang, Dr. Aliaa Alnaggar)

(¹University of Toronto)

Thesis, 2024

2024 Canadian Society for Mechanical Engineering (CSME) Thesis Award

bibtex / PDF

Studied the usability and advantages of FPSPs for building a more accurate state estimation framework

Designed an algorithm for VIO using Focal-Plane Binary Features

Implemented the FPSP vision-IMU fused estimation algorithm on a mobile device for offline and online real-world testing

Evaluated performance by benchmarking against FPSP vision-only estimation and ground-truth data

Conducted an extensive study of the per-frame execution time, accuracy, memory usage, and power consumption of the visual front-end processing on the FPSP


Organizations

- Reviewer (Conference) for ICRA 2026, IEEE International Conference on Robotics and Automation (ICRA)

- Reviewer (Journal) for ISJ 2025, IEEE Sensors Journal (ISJ)

- Reviewer (Journal) for RA-L 2024, IEEE Robotics and Automation Letters (RA-L)

- Reviewer (Journal) for EAAI 2026, Engineering Applications of Artificial Intelligence (EAAI)

- Reviewer (Conference) for RO-MAN 2025, IEEE International Conference on Robot and Human Interactive Communication (RO-MAN)

- Reviewer (Conference) for IROS 2025, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

- Reviewer (Conference) for ICRA 2024, IEEE International Conference on Robotics and Automation (ICRA)

- Reviewer (Conference) for IROS 2024, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

- Reviewer (Conference) for IEEE CCECE 2023, IEEE Canadian Conference on Electrical and Computer Engineering

- Reviewer (Conference) for IROS 2023, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)


Teaching

I am affiliated with several teaching institutions, where I teach Advanced Physics and Computer Science. Selected students I have taught:

- H. Li

- L. Liang

- N. Feng

- R. Sun* (Multiple Scholarship Offers in Australia, England)

- W. Zheng

- A. Zhang

- A. Huang* (Now at Berkeley Music School)

- B. Zheng

- B. Luo* (Full Scholarship Physics, University College London)

- B. Yang* (Now at King's College London)

- C. Yang* (Now at Durham University)

- F. Chen

- G. Ye* (Now at University of Hong Kong)

- J. Wu

- K. Sheng, Chuwen (Multiple University Offers)

- L. Lin

- N. Zhao* (Multiple University Offers in Business)

- N. Tian

- A. Pu

- D. Qiu

- M. Wang

- R. Zhang

- C. Zou

- Y. Caelon

- S. Yumo

- A. Ma

- A. Xu

- K. Chen

- F. Xin

- A. Yi Lan

- C. Yi

- F. Chen

- L. Li

- M. Di

- R. Zhang

- S. Fan

Thesis Mentoring/Guidance:

Junseo Kim (Now at TU Delft Robotics)

Autonomous Truck Navigation with Trailer Integration via Natural Language Processing (NLP)

More information, including additional students taught, can be found in my Curriculum Vitae (CV).
